Technology 101 – Everything about the Tech Evolution


Early computers like the ENIAC were 2-6 feet wide, 2 feet deep and 8 feet high. With all the other paraphernalia, they needed a whole room to house them. In fact, the word “debugging” is popularly traced to a moth that got stuck in a computer part (a relay of the Harvard Mark II, in 1947) and caused errors.

We have come a long way since then. Modern computers are made up of transistors, the fundamental building blocks of almost every electronic device. In 1965, Gordon Moore, who went on to co-found Intel, wrote a paper predicting that the number of transistors in a dense integrated circuit would double every year; in 1975 he revised the pace to every two years. This is known as Moore’s Law and it has had a deep impact on technology: transistors kept getting smaller and so did computers. Not only did computers keep getting smaller, they also kept getting more powerful and cheaper at the same time. The cost of the ENIAC that you see in the picture above was $6,000,000. The cellphone in your hand costs 17,000 times less, is 40,000,000 times smaller, uses 400,000 times less power, is 120,000 times lighter and is 1,300 times more powerful. Take a moment to comprehend the magnitude of this change.
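To get a feel for what "doubling every two years" means, here is a minimal sketch of the arithmetic. The function names are made up for illustration, and the figures are simply the ones quoted above ($6,000,000 and "17,000 times less"), not independent measurements.

```python
def transistor_growth(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth factor after `years` if the count doubles once per period."""
    return 2 ** (years / doubling_period_years)


def implied_phone_price(eniac_cost: float = 6_000_000, ratio: float = 17_000) -> float:
    """Phone price implied by the article's 'costs 17,000 times less' figure."""
    return eniac_cost / ratio


if __name__ == "__main__":
    # Compounding is what makes the law so dramatic over decades.
    for years in (2, 10, 20, 50):
        print(f"{years:>2} years -> x{transistor_growth(years):,.0f}")
    print(f"Implied phone price: ${implied_phone_price():,.0f}")
```

Ten years of doubling every two years gives a factor of 32, and fifty years gives a factor in the tens of millions, which is why the comparison with the ENIAC looks so extreme.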

This has led to computers becoming all-pervasive. Before Microsoft’s “Computer on Every Desktop” dream is realised, we will realise the “Computer in Every Hand” dream. According to GSMA data, two-thirds of the world’s population is now connected on mobile phones. “Connected” is the important word, and it brings us to the next revolution: the network revolution, or the Internet. The first message between two computers was sent in 1969 over ARPANET, a network funded by the US Department of Defense to link research computers. During the 1970s, the TCP/IP protocols were developed, standardising the way information is exchanged between computer systems. Commercial ISPs (Internet Service Providers) started appearing in the late 1980s. This set the platform for Tim Berners-Lee, working at CERN, to propose the Web in 1989 and implement HTTP, a protocol that allows information to be exchanged in a scalable manner. This led to the web browser and HTML, which let users view documents containing hyperlinks; clicking a hyperlink uses HTTP to fetch information from another computer and show it to the user in a WYSIWYG (What You See Is What You Get) manner.
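The hyperlink-to-HTTP step described above can be sketched in a few lines of Python using only the standard library. This is an illustration, not how any particular browser is written: the class and function names and the example.org URLs are made up, and the code only builds the text of the request instead of sending it over the network.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def build_get_request(url: str) -> str:
    """Return the text of a minimal HTTP/1.1 GET request for `url`."""
    parts = urlsplit(url)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    return (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {parts.netloc}\r\n"
        "Connection: close\r\n"
        "\r\n"
    )


def requests_for_page(base_url: str, html: str) -> list[str]:
    """For each hyperlink on the page, build the request a click would trigger."""
    collector = LinkCollector()
    collector.feed(html)
    # Relative links are resolved against the address of the current page.
    return [build_get_request(urljoin(base_url, href)) for href in collector.links]


if __name__ == "__main__":
    page = '<p>See the <a href="intro.html">intro</a> or <a href="http://example.com/">another site</a>.</p>'
    for request in requests_for_page("http://example.org/docs/index.html", page):
        print(request)
```

The point of the sketch is the division of labour: HTML says where the links are, the URL says which computer and which document, and HTTP is the short text message that asks for it.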

The first page on the Web was published at CERN. In 1994, the W3C or World Wide Web Consortium was established at MIT, and the Web as we know it took shape. The Browser Wars between Netscape and Microsoft soon followed. The dam broke open: Google came along to solve how to search for information on the internet, Amazon showed how we can buy anything from anywhere, Apple asked “Hey, what music do you want to listen to?”, and then came Facebook and the gang of social media companies. To summarise: computers are getting smaller, cheaper and more connected. They are embedding themselves into every aspect of our lives and reshaping society and businesses: how we exchange information, how we store memories, how we buy things and how we work. More is yet to come.

Businesses in the 70s and 80s were already using computers in the form of desktops and servers installed in their data centres. The applications were mostly for exchanging information between employees. Most custom-developed applications were later replaced with software packages for ERP, finance and HR from vendors such as SAP and Oracle. As the need for information and information-processing applications rose, so did the size of these data centres, and most businesses started investing huge sums of money in procuring hardware. With the internet boom, customer interactions started going digital: websites, mobile, IVR, email and so on. There was a massive explosion of digital information and transactions, which led businesses to invest even more in large data centres. A big challenge was emerging: procuring new machines was taking 3-4 months and projects were getting delayed. At the same time, the utilisation of machines was abysmal, at 5 to 7%. Energy charges were getting steeper. Hardware was becoming obsolete quickly. Downtimes were increasing. This was not acceptable in a 24x7 world.

In 2006, Amazon created a subsidiary called Amazon Web Services and introduced Elastic Compute Cloud (EC2). This was followed by Google introducing its cloud in 2008, and then Microsoft launched Azure in 2010. Hardware virtualisation is one of the key components that enable cloud providers to create a virtual layer above the physical layer of connected computers. It allows multiple operating system instances to run on top of the same physical machine, which in turn enables dynamic allocation of hardware, scaling and elasticity. So, instead of waiting months for new hardware, today we can add capacity at the click of a button. Cloud enables much more than just that: easy management, deployment, reliability and so on. The same technologies can be used in large private data centres (known as Private Clouds), but the economies of scale for large cloud providers are completely different. Clearly, cloud has many advantages over owning a data centre. Companies like ours wouldn’t exist if cloud weren’t available. But the debate between cloud and on-premise will probably never be settled. Most large organisations are adopting cloud in a hybrid model, where their core systems continue to run on-premise but they leverage the cloud for new-age applications like collaboration and analytics. From a CFO’s perspective, cloud is great because it converts fixed capital investments into flexible operational costs.
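As a rough illustration of why running several virtual machines on one physical machine raises utilisation, here is a toy placement sketch. It is not how any real cloud scheduler works: the `Host` class, the `place_vm` function, the first-fit rule and the core counts are all assumptions chosen for the example, and real schedulers also weigh memory, storage, network and failure domains.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A physical machine that can run several virtual machines (VMs)."""
    name: str
    cpu_cores: int
    vms: list = field(default_factory=list)  # entries are (vm_name, cores)

    @property
    def used_cores(self) -> int:
        return sum(cores for _, cores in self.vms)

    @property
    def utilisation(self) -> float:
        return self.used_cores / self.cpu_cores


def place_vm(hosts, vm_name: str, cores: int):
    """First-fit placement: use the first host with enough free cores.

    Returns the chosen host, or None if no host has room.
    """
    for host in hosts:
        if host.cpu_cores - host.used_cores >= cores:
            host.vms.append((vm_name, cores))
            return host
    return None


if __name__ == "__main__":
    hosts = [Host("host-a", 16), Host("host-b", 16)]
    for vm_name, cores in [("web", 4), ("db", 8), ("analytics", 6)]:
        chosen = place_vm(hosts, vm_name, cores)
        print(f"{vm_name} -> {chosen.name if chosen else 'no capacity'}")
    for host in hosts:
        print(f"{host.name}: {host.utilisation:.0%} of cores in use")
```

Adding a new "machine" here is just another call to `place_vm`, which is the click-of-a-button experience in miniature; the physical hosts were already bought and racked by the provider.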

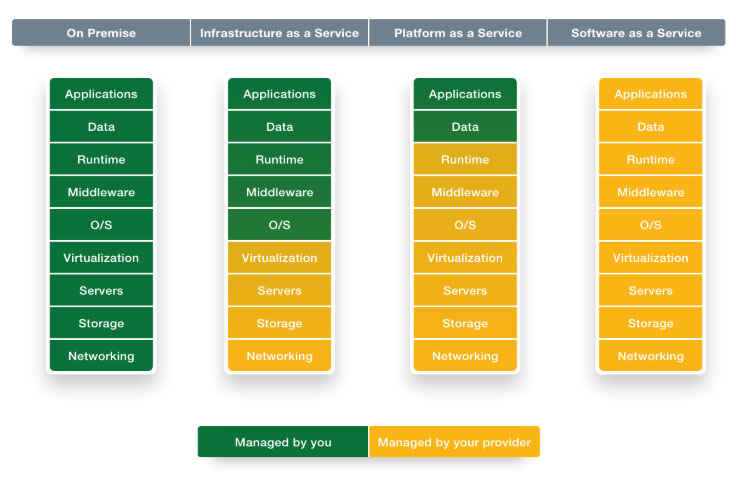

On-premise IT infrastructure vs. cloud services


Cloud providers did not stop at offering hardware virtualisation (Infrastructure as a Service or IaaS). They went on to offer managed operating systems, security services and more, expanding into what is called Platform as a Service (PaaS). The availability of these services enabled companies to build and deploy software applications that can be used by many other companies (Software as a Service or SaaS). Some SaaS providers, such as Salesforce, grew so big that they now provide the entire stack: IaaS, PaaS and SaaS. Broadly, AWS started as an IaaS provider, Microsoft Azure and Google started as PaaS providers and Salesforce started as a SaaS provider, but now they are entering each other’s space. Infrastructure providers price based on storage (how much data you store), compute (how many business rules you run), IOPS (how much data you access), network usage (how much data flows in and out of the system) and similar measures. This is why PaaS and SaaS providers, in turn, charge for the number of years of data customers want to keep, the volume of server calls they make, the volume of data or reports they download, the number of users who access the system and so on.
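The four pricing dimensions above can be turned into a simple bill estimate. The sketch below is only an illustration: the `Rates` class, the `monthly_bill` function and every unit price in it are hypothetical placeholders, not the rates of any real provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Hypothetical unit prices in USD; not taken from any real provider."""
    storage_per_gb_month: float = 0.02
    compute_per_hour: float = 0.10
    per_million_io_requests: float = 0.05
    egress_per_gb: float = 0.09


def monthly_bill(storage_gb: float, compute_hours: float, io_requests: int,
                 egress_gb: float, rates: Rates = Rates()) -> dict:
    """Break a month's usage into one charge per pricing dimension."""
    lines = {
        "storage": storage_gb * rates.storage_per_gb_month,     # how much data you store
        "compute": compute_hours * rates.compute_per_hour,      # how long your code runs
        "iops": io_requests / 1_000_000 * rates.per_million_io_requests,  # how much you access
        "network": egress_gb * rates.egress_per_gb,             # how much data flows out
    }
    lines["total"] = sum(lines.values())
    return lines


if __name__ == "__main__":
    bill = monthly_bill(storage_gb=500, compute_hours=720,
                        io_requests=20_000_000, egress_gb=100)
    for item, amount in bill.items():
        print(f"{item:<8} ${amount:,.2f}")
```

Because every line of the bill scales with usage, a SaaS provider built on top of such infrastructure has a direct incentive to meter the same things for its own customers.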